
- RectLabel, LabelMe, and OpenCV all offer some support for annotating. "Annotation" is the more common name used in software suites for tools that add labels to images and video frames.

My current goal is to train an ML model on the COCO Dataset, and then to generate my own labeled training data to train on. So far, I have been using Facebook's maskrcnn-benchmark model and training on the COCO 2014 dataset.


RectLabel is an image annotation tool to label images for bounding box object detection and segmentation. "RectLabel - One-time payment" is a paid up-front version.

Here is my Jupyter Notebook to go with this blog post.

Okay, here’s an account of the steps I took.

Getting the data

The COCO dataset can be downloaded here.

I am only training on the 2014 dataset.

I’m working with this project:

The dataset also has to be linked into the correct directories in order to use it with the GitHub project:
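The linking commands themselves aren't reproduced here. As a rough sketch, assume the layout described in the maskrcnn-benchmark README: a `datasets/coco` folder in the repo that contains `annotations`, `train2014` and `val2014`. Under that assumption, the symlinks could also be created from Python. `link_coco` is a hypothetical helper. Check the folder names against the project's README and `maskrcnn_benchmark/config/paths_catalog.py`.

```python
import os
from pathlib import Path

# Assumed sub-folders of a COCO 2014 download (check against the project's README).
SUBDIRS = ("annotations", "train2014", "val2014")


def link_coco(coco_root, repo_root, subdirs=SUBDIRS):
    """Create repo_root/datasets/coco/<name> -> coco_root/<name> symlinks.

    Hypothetical helper. Returns the list of link paths; links or folders
    that already exist are left untouched, so it is safe to re-run.
    """
    coco_root = Path(coco_root).expanduser().resolve()
    target_dir = Path(repo_root).expanduser() / "datasets" / "coco"
    target_dir.mkdir(parents=True, exist_ok=True)
    links = []
    for name in subdirs:
        src = coco_root / name
        dst = target_dir / name
        if not src.exists():
            raise FileNotFoundError(f"missing COCO folder: {src}")
        if not (dst.is_symlink() or dst.exists()):
            os.symlink(src, dst, target_is_directory=True)
        links.append(dst)
    return links
```

For example, call `link_coco("/data/coco", "~/github/maskrcnn-benchmark")`, replacing both paths with your own download and checkout locations. Symlinking instead of copying leaves the images where they were downloaded.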

Training

Here are some training commands that worked. Funny enough, the first command is for 720,000 iterations, and it reported that it was going to take 3+ days to complete on my GTX 1080 Ti. Also, I could only load 2 images at a time, and that took up 10 of the card's 11 GB of memory. This is a big model!
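Here is a quick back-of-the-envelope check on those numbers, as a small Python sketch. It assumes "3+ days" means about 3 × 24 hours and that each iteration consumes one batch of 2 images. The function names are made up for illustration, and `num_images` should be the size of whatever training split the config actually uses.

```python
SECONDS_PER_DAY = 24 * 60 * 60


def seconds_per_iteration(total_days, iterations):
    """Average wall-clock seconds per iteration implied by a total runtime."""
    return total_days * SECONDS_PER_DAY / iterations


def epochs_covered(iterations, images_per_batch, num_images):
    """How many full passes over a dataset of num_images a run makes."""
    return iterations * images_per_batch / num_images


# Figures from the post: 720,000 iterations, roughly 3 days, 2 images per batch.
print(f"{seconds_per_iteration(3, 720_000):.2f} s/iteration")  # 0.36 s/iteration
print(f"{720_000 * 2:,} images processed in total")  # 1,440,000 images processed in total
```

That works out to about a third of a second per iteration, with 1.44 million images pushed through the model. Passing the training split's size to `epochs_covered` gives the number of passes over the data.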

1-16-2019

1-17-2019

Some things that maskrcnn does really well

- everything’s a hyperparameter
- logging, for lots of feedback
- a single tqdm output for training


COCO Dataset format notes

Here are the things I learned about the COCO dataset that will be important later, when I train on my own datasets in this format:

Images

Image entries have this format:

Annotations

Annotations have this format:
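Whatever the exact fields, the ids are what tie the file together. Every entry in `annotations` carries an `image_id` that matches the `id` of an entry in `images`, and a `category_id` that matches an entry in `categories`. Below is a minimal sketch of joining them. It assumes the JSON (for example `annotations/instances_train2014.json`) has already been loaded with `json.load`. `index_annotations` and `category_names` are hypothetical helpers; pycocotools' `COCO` class builds similar indexes for you.

```python
from collections import defaultdict


def index_annotations(coco):
    """Group a loaded COCO dict's annotations under their image records.

    Returns {image_id: (image_record, [annotation, ...])}; images with no
    annotations get an empty list.
    """
    images = {img["id"]: img for img in coco["images"]}
    anns_by_image = defaultdict(list)
    for ann in coco["annotations"]:
        anns_by_image[ann["image_id"]].append(ann)
    return {img_id: (img, anns_by_image.get(img_id, [])) for img_id, img in images.items()}


def category_names(coco):
    """Map each category id to its human-readable name."""
    return {cat["id"]: cat["name"] for cat in coco["categories"]}
```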

segmentation explained:

I was confused about how the segmentation above was converted to a mask. The segmentation is a flat list of x,y coordinates in the format [x1, y1, x2, y2, ...]. In this code block, the list is reshaped to [(x1, y1), (x2, y2), ...], which is then usable by matplotlib.

poly becomes an np.ndarray of shape (N, 2) where N is the number of segmentation points.
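Here is a minimal NumPy-only sketch of the same idea, going from flat list to (N, 2) array to boolean mask. The mask step uses a plain even-odd (ray casting) test on pixel centers, chosen here for illustration. It is not how matplotlib or pycocotools rasterize; for real COCO data, pycocotools' `annToMask` is the usual tool.

In the instances files, a non-crowd `segmentation` is a list of these flat lists, one per polygon part. For a single-part object you would pass `ann["segmentation"][0]`. Crowd annotations (`iscrowd` = 1) are stored as RLE rather than polygons. The function names below are hypothetical.

```python
import numpy as np


def segmentation_to_points(segmentation):
    """Reshape a flat polygon [x1, y1, x2, y2, ...] into an (N, 2) array."""
    return np.asarray(segmentation, dtype=float).reshape(-1, 2)


def polygon_to_mask(poly, height, width):
    """Rasterize an (N, 2) polygon into a boolean (height, width) mask.

    Even-odd rule: a pixel is inside if a horizontal ray from its center
    (x + 0.5, y + 0.5) crosses the polygon's edges an odd number of times.
    """
    ys, xs = np.mgrid[0:height, 0:width]
    px, py = xs + 0.5, ys + 0.5
    inside = np.zeros((height, width), dtype=bool)
    xa_all, ya_all = poly[:, 0], poly[:, 1]
    xb_all, yb_all = np.roll(xa_all, -1), np.roll(ya_all, -1)  # next vertex, wrapping around
    for xa, ya, xb, yb in zip(xa_all, ya_all, xb_all, yb_all):
        # Does this edge straddle the horizontal line through each pixel center?
        crosses = (ya > py) != (yb > py)
        # x where the edge meets that line (horizontal edges never "cross", so
        # their divide-by-zero results are masked out by `crosses`).
        with np.errstate(divide="ignore", invalid="ignore"):
            x_cross = xa + (py - ya) * (xb - xa) / (yb - ya)
        inside ^= crosses & (px < x_cross)
    return inside
```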

bbox explained:

bbox is of format [x, y, width, height]. Coordinates start at the top left of the image, which is point (0,0), and y increases going down, since it’s measured from the top. The x,y values are the box's top-left corner, offset from (0,0). The width and height values are offsets from that x,y point, so the opposite corner is at (x + width, y + height).
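COCO stores boxes as [x, y, width, height], so the last two bbox numbers are offsets from x,y. Converting to the corner form that Pascal VOC and many other tools use is just an addition. The sketch below uses hypothetical helper names. `polygon_bbox` shows how a tight box follows from a flat segmentation polygon; for a single-part object you would expect it to be close to the stored bbox.

```python
def xywh_to_xyxy(bbox):
    """[x, y, width, height] -> corner form [x_min, y_min, x_max, y_max]."""
    x, y, w, h = bbox
    return [x, y, x + w, y + h]


def polygon_bbox(segmentation):
    """Tight [x, y, width, height] box around one flat polygon [x1, y1, x2, y2, ...]."""
    xs = segmentation[0::2]  # every even index is an x
    ys = segmentation[1::2]  # every odd index is a y
    x_min, y_min = min(xs), min(ys)
    return [x_min, y_min, max(xs) - x_min, max(ys) - y_min]
```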

Conclusion

I’ve now learned 2 datasets: Pascal VOC and COCO. Now I know a little more about why most projects doing image tasks support both.

What’s Next

Next, I want to label my own data and train on it. The last section of the notebook is my attempt at this, using RectLabel.

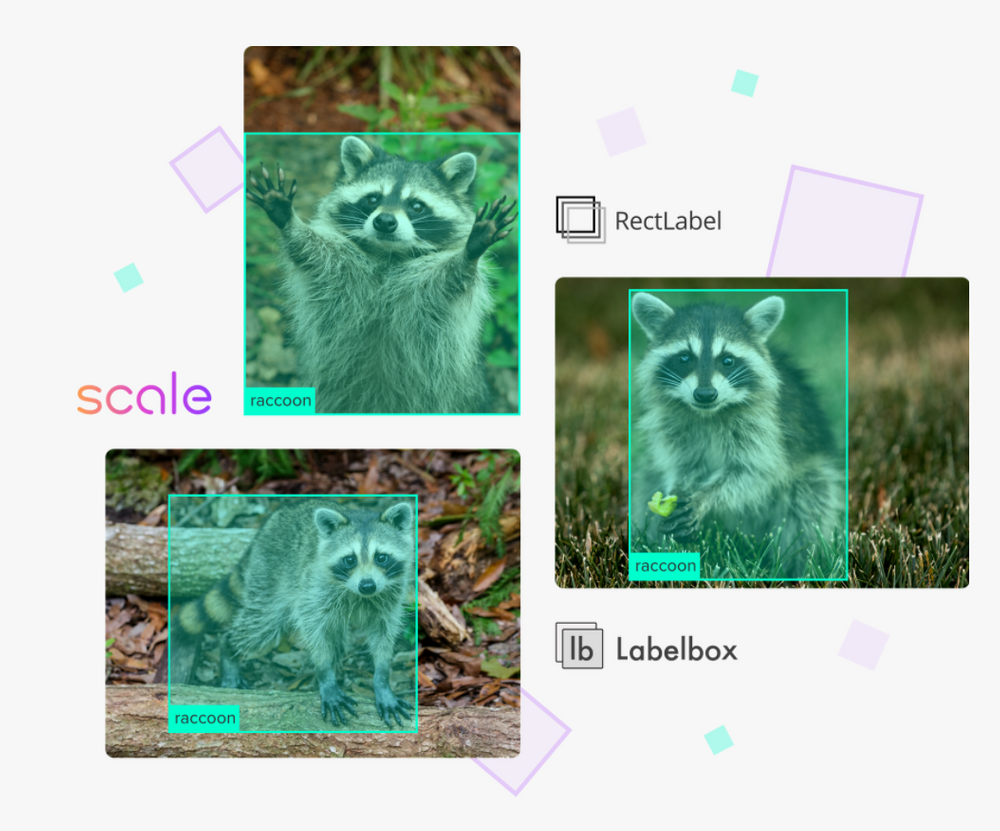

I reviewed 3 different applications for labeling data:

- Labelbox

- RectLabel

- Labelme

My criteria: it should be free, and I should be able to run the program locally and label my own data as I please. User-friendly is better, obviously.

If I can’t find something, then maybe I have to create a simple app for labeling data. Definitely doable, but it’d be a detour.

Labelbox seems like it used to be open source, but they turned it into a SaaS, and I couldn’t get it to run.

RectLabel is $5, which isn’t bad, but it didn’t generate the segmentation data in the format that I need.

Labelme seems to be exactly what I am looking for, and it's open source. There isn’t a script for exporting to the COCO Dataset 2014 format, so maybe this is an opportunity to contribute as well :)
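Here is a rough sketch of what such an export script might look like. It assumes LabelMe's JSON layout: a `shapes` list whose items have `label`, `points` and `shape_type`, plus `imagePath`, `imageWidth` and `imageHeight`. `labelme_to_coco` is a hypothetical name, not part of LabelMe. It handles polygons only. It computes `area` with the shoelace formula, which may differ slightly from the `area` values in the official COCO files.

```python
def shoelace_area(points):
    """Polygon area from [(x, y), ...] via the shoelace formula."""
    total = 0.0
    n = len(points)
    for i in range(n):
        x1, y1 = points[i]
        x2, y2 = points[(i + 1) % n]  # wrap around to close the polygon
        total += x1 * y2 - x2 * y1
    return abs(total) / 2.0


def labelme_to_coco(labelme_docs):
    """Convert already-loaded LabelMe JSON dicts into one COCO-style dict.

    Hypothetical sketch: only shape_type == "polygon" is converted (a missing
    shape_type is treated as a polygon); other shape types are skipped.
    Category ids are assigned in order of first appearance, starting at 1.
    """
    images, annotations, categories = [], [], {}
    ann_id = 1
    for image_id, doc in enumerate(labelme_docs, start=1):
        images.append({
            "id": image_id,
            "file_name": doc["imagePath"],
            "width": doc["imageWidth"],
            "height": doc["imageHeight"],
        })
        for shape in doc["shapes"]:
            if (shape.get("shape_type") or "polygon") != "polygon":
                continue
            label = shape["label"]
            if label not in categories:
                categories[label] = len(categories) + 1
            points = [(float(x), float(y)) for x, y in shape["points"]]
            flat = [c for point in points for c in point]  # [x1, y1, x2, y2, ...]
            xs, ys = flat[0::2], flat[1::2]
            annotations.append({
                "id": ann_id,
                "image_id": image_id,
                "category_id": categories[label],
                "segmentation": [flat],  # one polygon part per shape
                "area": shoelace_area(points),
                "bbox": [min(xs), min(ys), max(xs) - min(xs), max(ys) - min(ys)],
                "iscrowd": 0,
            })
            ann_id += 1
    return {
        "images": images,
        "annotations": annotations,
        "categories": [{"id": cid, "name": name} for name, cid in categories.items()],
    }
```

Writing the returned dict out with `json.dump` would produce one COCO-style annotations file for the whole labeled set.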

So labeling my own data and training on it is the next step. Okay, until next time.

Random… Extra

Some random notes about things learned when doing this.

commands

count files in a dir: to check that the image file count matches what was expected, for example when a zip file didn’t fully download.
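The shell command isn't shown here; below is a Python equivalent as a minimal sketch. `count_files` and `check_count` are hypothetical helpers. `expected` is whatever image count you expect for the split you downloaded.

```python
from pathlib import Path


def count_files(directory, pattern="*"):
    """Count regular files directly inside `directory` that match `pattern`."""
    return sum(1 for p in Path(directory).glob(pattern) if p.is_file())


def check_count(directory, expected, pattern="*"):
    """Return (actual_count, matches_expected) so a short download stands out."""
    actual = count_files(directory, pattern)
    return actual, actual == expected
```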

wget in the background with no timeout, so I can start the job from my laptop while the process runs as a daemon on the DL machine

fastjar

If a zip file didn’t fully download, fastjar can be used to unzip it.

Trying to unzip the file will give you:

Use fastjar if the whole zip file didn’t download:
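The unzip error and the fastjar invocation aren't reproduced here. As a hedged sketch, Python's standard-library `zipfile` can at least tell you which case you're in before you pick a tool. A download that stopped early usually lacks the central directory stored at the end of the archive, so `zipfile` can't open it at all. That is the situation where a front-to-back extractor like fastjar may still recover the entries that did arrive. `zip_status` is a hypothetical helper that only diagnoses; it doesn't recover anything.

```python
import zipfile
import zlib


def zip_status(path):
    """Classify an archive as 'ok', 'corrupt' or 'unreadable'.

    'unreadable': no end-of-archive directory was found (typical of a
                  truncated download, or not a zip at all).
    'corrupt':    the archive opens, but a member fails its CRC or
                  decompression check.
    """
    if not zipfile.is_zipfile(path):
        return "unreadable"
    try:
        with zipfile.ZipFile(path) as zf:
            bad_member = zf.testzip()  # name of the first bad member, or None
    except (zipfile.BadZipFile, zlib.error):
        return "corrupt"
    return "ok" if bad_member is None else "corrupt"
```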

nvidia-smi

keeps the GPU readout updating continuously, similar to running “tail -f” on nvidia-smi output
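If you want those numbers in a script rather than on screen, nvidia-smi's query mode prints machine-readable CSV. This minimal sketch assumes the `--query-gpu` fields `memory.used`, `memory.total` and `utilization.gpu` with `--format=csv,noheader,nounits`, which report memory in MiB and utilization in percent. `parse_gpu_csv` and `query_gpus` are hypothetical helpers.

```python
import subprocess

QUERY = "memory.used,memory.total,utilization.gpu"


def parse_gpu_csv(text):
    """Parse one CSV line per GPU into dicts (memory in MiB, util in percent)."""
    gpus = []
    for line in text.strip().splitlines():
        used, total, util = (int(v.strip()) for v in line.split(","))
        gpus.append({"memory_used": used, "memory_total": total, "util": util})
    return gpus


def query_gpus():
    """Run nvidia-smi once and return parsed readings (requires an NVIDIA driver)."""
    out = subprocess.run(
        ["nvidia-smi", f"--query-gpu={QUERY}", "--format=csv,noheader,nounits"],
        capture_output=True, text=True, check=True,
    ).stdout
    return parse_gpu_csv(out)
```

Calling `query_gpus()` in a loop with `time.sleep(1)` gives a tail-like readout. That is handy for catching memory use like the 10-of-11 GB seen during training.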

Manual image annotation tools

Manual image annotation is the process of manually defining regions in an image and creating a textual description of those regions. Such annotations can for instance be used to train machine learning algorithms for computer vision applications.

This is a list of computer software which can be used for manual annotation of images.

| Software | Description | Platform | License | References |
|---|---|---|---|---|
| Computer Vision Annotation Tool (CVAT) | Computer Vision Annotation Tool (CVAT) is a free, open source, web-based annotation tool which helps to label video and images for computer vision algorithms. CVAT has many powerful features: interpolation of bounding boxes between key frames, automatic annotation using TensorFlow OD API and deep learning models in Intel OpenVINO IR format, shortcuts for most of critical actions, dashboard with a list of annotation tasks, LDAP and basic authorizations, etc. It was created for and used by a professional data annotation team. UX and UI were optimized especially for computer vision annotation tasks. | JavaScript, HTML, CSS, Python, Django | MIT License | [1][2][3] |
| ImageTagger | An online platform for collaborative image labeling. It allows bounding box, polygon, line and point annotations and includes user, image and annotation management, annotation verification and customizable export formats. | Python (Django), JavaScript, HTML, CSS | MIT License | [4][5][6][7][8][9][10] |
| LabelMe | Online annotation tool to build image databases for computer vision research. | Perl, JavaScript, HTML, CSS[11] | MIT License | [12] |
| RectLabel | An image annotation tool to label images for bounding box object detection and segmentation.[13] | macOS | Custom License | [12][14] |
| VGG Image Annotator (VIA) | VIA is a simple and standalone manual annotation tool for images, audio and video. This is a light weight, standalone and offline software package that does not require any installation or setup and runs solely in a web browser. The VIA software allows human annotators to define and describe spatial regions in images or video frames, and temporal segments in audio or video. These manual annotations can be exported to plain text data formats such as JSON and CSV and therefore are amenable to further processing by other software tools. VIA also supports collaborative annotation of a large dataset by a group of human annotators. The BSD open source license of this software allows it to be used in any academic project or commercial application.[15] | JavaScript, HTML, CSS[16] | BSD-2 clause license | [15][17][18] |
| VoTT (Visual Object Tagging Tool) | Free and open source electron app for image annotation and labeling developed by Microsoft. | TypeScript/Electron (Windows, Linux, macOS) | MIT License | [19][20][21][22][23][24] |

References


1. 'Intel open-sources CVAT, a toolkit for data labeling'. VentureBeat. 2019-03-05. Retrieved 2019-03-09.
2. 'Computer Vision Annotation Tool: A Universal Approach to Data Annotation'. software.intel.com. 2019-03-01. Retrieved 2019-03-09.
3. 'Computer Vision Annotation Tool (CVAT) source code on github'. Retrieved 3 March 2019.
4. 'ImageTagger source code on github'. Retrieved 25 July 2020.
5. Marzahl, C.; Aubreville, M.; Bertram, C. (2020). EXACT: A collaboration toolset for algorithm-aided annotation of almost everything. arXiv:2004.14595.
6. Fiedler, N.; Bestmann, M.; Hendrich, N. (2018). ImageTagger: Open Source Online Platform for Image Labeling.
7. WF Wolves – Humanoid KidSize Team Description for RoboCup 2020 (PDF). Retrieved 26 July 2020.
8. 24 Best Image Annotation Tools for Computer Vision. Retrieved 26 July 2020.
9. Scheunemann, M.; van Dijk, S.; Miko, R. (2019). Bold Hearts Team Description for RoboCup 2019. arXiv:1904.10066.
10. Bator, M.; Maciej, P. (2019). 'Image Annotating Tools for Agricultural Purpose: a Requirements Study' (PDF). Machine Graphics and Vision. 28.
11. 'LabelMe Source'. Retrieved 26 January 2017.
12. 'Reducing the Pain: A Novel Tool for Efficient Ground-Truth Labelling in Images' (PDF). Auckland University of Technology. Retrieved 2018-10-13.
13. 'RectLabel support page'. Retrieved 29 March 2017.
14. 'Faster R-CNN-Based Glomerular Detection in Multistained Human Whole Slide Images'. The University of Tokyo Hospital. Retrieved 2018-07-04.
15. Dutta, Abhishek; Zisserman, Andrew (2019). 'The VIA Annotation Software for Images, Audio and Video'. Proceedings of the 27th ACM International Conference on Multimedia: 2276–2279. arXiv:1904.10699. Bibcode:2019arXiv190410699D. doi:10.1145/3343031.3350535. ISBN 9781450368896. S2CID 173188066.
16. 'Visual Geometry Group / via'. GitLab. Retrieved 2019-02-05.
17. 'Easy Image Bounding Box Annotation with a Simple Mod to VGG Image Annotator'. Puget Systems. Retrieved 2019-02-05.
18. Humans in the Loop (2018-10-30). 'The best image annotation platforms for computer vision (+ an honest review of each)'. Hacker Noon. Retrieved 2019-02-05.
19. Tung, Liam. 'Free AI developer app: IBM's new tool can label objects in videos for you'. ZDNet.
20. Bornstein, Aaron (Ari) (February 4, 2019). 'Using Object Detection for Complex Image Classification Scenarios Part 4:'. Medium.
21. Solawetz, Jacob (July 27, 2020). 'Getting Started with VoTT Annotation Tool for Computer Vision'. Roboflow Blog.
22. 'Best Open Source Annotation Tools for Computer Vision'. www.sicara.ai.
23. 'Beyond Sentiment Analysis: Object Detection with ML.NET'. September 20, 2020.
24. 'GitHub - microsoft/VoTT: Visual Object Tagging Tool: An electron app for building end to end Object Detection Models from Images and Videos'. November 15, 2020, via GitHub.
